
I keep counting – Behind the music

Algomus is a research team in Computer Music in the Université de Lille, with strong links with the Université de Picardie Jules Verne in Amiens.

Our research interest is mostly the analysis of music and notably its structure, harmony, rhythm, and texture. Participating in the “AI Song Contest” was thus a challenge for us from both the creative and the technical points of view: in a very short time frame, we had to adapt our own simple models, or experiment with existing ones, for a related yet still unfamiliar task, music generation through AI. Needless to say, we were very excited by this new challenge and eager to jump in.

The song we propose is called I Keep Counting. We experimented with a co-creative approach in writing this song: that is, we used both AI generation and human composition. We openly detail this process here and in the report we are preparing. We were very happy to collaborate with Niam, a young artist and a student at the Université de Lille, who sang I Keep Counting with her warm voice, underlining the universal message of the lyrics.

Participating in this contest opened our eyes to many points about models for music generation, but also for music analysis. This experience raised many thoughts on the relationship between creativity and machines. The AI surprised us: besides the insightful lyrics on the vanity of counting, the models generated an uncommon succession of sections as well as an unexpected chord sequence in the chorus. Working with this material was thus a stimulus for human creativity… and it was fun. Also, in the end, we got a great earworm: I keep counting, I keep counting, na na na, na na… We and our families have been humming the tune for the last two weeks!

Do we want to replace musicians with AI?

While we did use a lot of automated processes, we never intended to produce fully automated, just-push-a-button music. Why is that so? Aside from the fact that fully automated composition is still a very challenging research problem, in our eyes it would take all the art (and the fun!) away. We like to imagine the AI assisting rather than replacing the composer.

As musicians ourselves, our goal was therefore to explore the co-creativity between the AI and the human. During the creative process, we often found ourselves facing the same very old question of creative freedom vs. artificial constraints, as explained below.

How did we do it?

Several approaches have been introduced to generate music or musical material. Many of them now involve deep learning (Roy 2017, Pachet 2019). Adding structure is a key challenge in music generation (Herremans 2017, Medeot 2019, Zhou 2019).

Our co-creative approach can be summed up in three words: model, generate, and compose.

We model some musical element, based on data to which we have access and on previous research. These models are used to generate new data. The first batch of results was often not good enough, so we started an iterative process, tightening some bolts and screws in our models. Then came an act of composing, much like that of a musician who has to select among the original chord patterns, lyrics, and melodies that they can fluently create in their mind. What do they choose? We manually filtered the AI-generated material. We see this filtering as a typical co-creative task, and we must acknowledge that we found these selection steps as fun and stimulating as composing from scratch. To balance this human intervention, we tried as much as possible to keep different paths open and to choose among them by rolling the dice.

As a team working on structure – most of the time from the analytical point of view – we decided to start from the song structure. Once this was modeled and generated, we added all the other elements: harmony, lyrics, melody, instrumental hooks. We thus generated a lead sheet that we subsequently typeset in MuseScore and that you can now see at the top of the page!

Structure

Model. SALAMI (Smith 2011) is a great dataset with 2000+ structures, including 400+ pop titles. We mapped these structures to the 6 labels of the Eurovision dataset: intro, verse, bridge, pre-chorus, chorus, and hook/instrumental. We trained a first-order Markov model on the succession of sections and generated new structures by a random walk on this model. We constrained the model to generate structures containing between 5 and 10 sections, with at least 4 repeated sections.
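To make the idea concrete, here is a minimal Python sketch of a first-order Markov model over section labels with a constrained random walk. It is an illustration only, not the code used for the song: the training structures are hypothetical toy data (not SALAMI), the function names are ours, and we assume that "at least 4 repeated sections" means that at least 4 sections carry a label occurring more than once.

```python
import random
from collections import Counter, defaultdict

START, END = "<start>", "<end>"

# Hypothetical toy training data, NOT taken from SALAMI.
TOY_STRUCTURES = [
    ["intro", "verse", "chorus", "verse", "chorus", "bridge", "chorus"],
    ["intro", "verse", "pre-chorus", "chorus", "verse", "pre-chorus", "chorus", "hook"],
    ["intro", "chorus", "verse", "chorus", "bridge", "chorus", "hook"],
    ["verse", "chorus", "verse", "chorus", "hook", "chorus"],
]


def train(structures):
    """Count label-to-label transitions (first-order Markov model)."""
    transitions = defaultdict(Counter)
    for sections in structures:
        path = [START] + sections + [END]
        for current, following in zip(path, path[1:]):
            transitions[current][following] += 1
    return transitions


def random_walk(transitions, rng, max_len=10):
    """Walk from START until END is drawn or max_len sections are produced."""
    state, sections = START, []
    while len(sections) < max_len:
        choices = transitions[state]
        state = rng.choices(list(choices), weights=list(choices.values()))[0]
        if state == END:
            break
        sections.append(state)
    return sections


def is_valid(sections, min_len=5, max_len=10, min_repeated=4):
    """Assumed constraint: enough sections whose label occurs more than once."""
    counts = Counter(sections)
    repeated = sum(n for n in counts.values() if n > 1)
    return min_len <= len(sections) <= max_len and repeated >= min_repeated


def generate(transitions, rng, n=20, max_tries=10000):
    """Rejection sampling: keep only the walks that satisfy the constraints."""
    results = []
    for _ in range(max_tries):
        if len(results) == n:
            break
        candidate = random_walk(transitions, rng)
        if is_valid(candidate):
            results.append(candidate)
    return results


model = train(TOY_STRUCTURES)
structures = generate(model, random.Random(0))
```

Rejection sampling is only one simple way to enforce such constraints; it discards invalid walks instead of steering the walk itself.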

Generate and compose. The model generated 20 structures. We rejected the structures that did not inspire us and we were left with 11 candidates out of the initial 20, marked from S1 to S11. Then we rolled the dice: The result was the structure S8, [intro, chorus, verse, bridge, verse, chorus, bridge, chorus, hook]. That was definitely not our favorite candidate, as it didn’t seem natural to us, especially because of the bridge linking either two verses or two choruses. Nevertheless, we decided to keep it, seeing in that original structure a stimulating challenge!

We know that better models, taking more context into account, could be trained, but in the end we were happy that the structure we rolled had some unexpected turns. Indeed, S8 is somewhat more “creative” than a very conventional structure like [intro, verse, chorus, verse, chorus, hook, chorus, hook].

Chords

Model. There have been several works in the MIR community on chord sequence generation, including recent works with grammars or deep learning (Conklin 2018, Huang 2016, Paiement 2005, Rohrmeier 2011…). A problem we had to face with chord generation is that it needs to deal with a large vocabulary: in other words, there are thousands of possible chords. Since we did not have a huge amount of training data here (200+ songs, the Eurovision MIDI files provided by the organizers), we had to simplify this vocabulary. We transposed all songs to C major or A minor and encoded each chord along two separate but related axes: the pitch class of the chord root (12 possibilities) and the quality of the chord (major triad, minor triad, minor seventh chord, …), as estimated by music21 (Cuthbert 2010). We used an LSTM neural network to analyze and generate chord sequences thus encoded.
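The two-axis encoding can be sketched in a few lines of plain Python. This is a simplified illustration under our own assumptions: it does not use music21, it takes the key and the chord quality as given instead of estimating them, and the names `EncodedChord`, `transposition_interval`, and `encode` are hypothetical.

```python
from typing import NamedTuple

NATURAL_PITCH_CLASSES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


class EncodedChord(NamedTuple):
    root_pc: int   # pitch class of the root (0..11) after transposition
    quality: str   # e.g. "major", "minor", "minor-seventh"


def pitch_class(name: str) -> int:
    """Pitch class of a note name such as 'C', 'F#' or 'Bb'."""
    pc = NATURAL_PITCH_CLASSES[name[0].upper()]
    for accidental in name[1:]:
        pc += {"#": 1, "b": -1}[accidental]
    return pc % 12


def transposition_interval(tonic: str, mode: str) -> int:
    """Semitones to add so that a major key lands on C and a minor key on A."""
    target = 0 if mode == "major" else 9
    return (target - pitch_class(tonic)) % 12


def encode(root: str, quality: str, tonic: str, mode: str) -> EncodedChord:
    """Encode one chord as (transposed root pitch class, quality)."""
    shift = transposition_interval(tonic, mode)
    return EncodedChord((pitch_class(root) + shift) % 12, quality)


# A D major progression D, Bm, G, A becomes C, Am, F, G after transposition.
progression = [("D", "major"), ("B", "minor"), ("G", "major"), ("A", "major")]
encoded = [encode(root, quality, "D", "major") for root, quality in progression]
```

Splitting root and quality keeps each axis small (12 roots and a handful of qualities) rather than one vocabulary with an entry per full chord symbol.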

Generate and compose. The results of our model are here. Our model and the generation process can definitely be improved to get more common results (some of the generations are barely tonal), but we nevertheless like how the sequences were sometimes “relevant” and sometimes not.

As for the structure, we hand-picked a few chord sequences (13 out of 50 in total) and chose among them by rolling the dice. We obtained the following chord sequences:

- Intro: C Gm7 A Cm7 C A

- Chorus: Am Em Gm Cm B♭m Cm D Fnc

- Verse: C D F#Maj7 Gnc

- Bridge: Em D A C?

- Hook: C F Dm Fm

We eventually decided to use for the Intro the same chords as the Hook, with a C pedal, and to swap the chords of the Bridge and the Verse (because the F#Maj7 G seemed to us such a nice candidate for the bridge). We also decided to keep “strange” chords that the AI suggested, such as the B♭m in the chorus and the F#Maj7 in the bridge, but we gathered the 4 chords around this “strange” B♭m chord in a single measure, ending the chorus on the bright D chord.

But the story of this strange measure is not finished yet. We realized one week before the submission that we had made an error in our piano part, a B♮ instead of a B♭ for the G minor, and had kept this B♮ over the whole Gm (Cm B♭m Cm) measure. Note that we had worked on the song for two weeks before realizing our mistake! It is known that musicians sometimes auto-correct things (Sloboda 1984). When we finally realized it, many parts were finished, including the recording of the vocal harmonies sung by Niam, which would have needed to change. We decided, in the end, to keep this artifact as a sign of how strange things can happen in a creative process.

Lyrics

Model, Generate. Could the spirit of the lyrics of a Eurovision song be captured by an AI model? We tested an extreme position: can Eurovision songs convey insightful messages with… only two words? We thus listed all pairs of words (bi-grams) in Eurovision songs, focusing on nominal groups and complete sentences. Guess what? The most common pairs are my heart and my love ;-)

You can already recognize the embryo of the lyrics of I Keep Counting! Hoping that some text might emerge from these bi-grams, we used them as an input seed for the GPT-2 model. The first generation already gave us the full text that you can find on the homepage.
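The bi-gram listing step can be illustrated with a short Python sketch. It is a simplification made under our own assumptions: the lyric lines are hypothetical toy data, every pair of adjacent words within a line is counted, and no linguistic filtering (nominal groups, complete sentences) is applied. The GPT-2 seeding is not shown.

```python
import re
from collections import Counter


def bigrams(line):
    """Pairs of adjacent words within a single lyric line."""
    words = re.findall(r"[a-z']+", line.lower())
    return zip(words, words[1:])


def count_bigrams(lines):
    """Count bi-grams over all lines, without crossing line boundaries."""
    counts = Counter()
    for line in lines:
        counts.update(bigrams(line))
    return counts


# Hypothetical toy lyrics, NOT taken from the Eurovision dataset.
TOY_LYRICS = [
    "My heart is calling",
    "You are my love, my heart",
    "Take my heart tonight",
]

counts = count_bigrams(TOY_LYRICS)
top_pair, top_count = counts.most_common(1)[0]
```

On this toy input, the most frequent pair is `("my", "heart")`, seen three times.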

Try to understand? We liked the repetitiveness of the generated text. Repetitiveness is typically undesirable in prose, but actually quite musical and appropriate to the lyrics of a song (or even a poem). Then, there is a common thread of counting something (time, maybe?). The singer changes their opinion on counting: first, they sound quite hopeless (I stop counting, it will never end) but then something happens – maybe the love story hinted at in the first verses? The singer gathers the strength to keep counting down the years. All this despite the endless war going on each day between mine and yours (slightly ungrammatical, but understandable) because the singer knows that their significant other at least will come, no matter what.

Well, of course, this is only one interpretation, trying to put some meaning into words that might look quite impenetrable at first glance – and, honestly, many of us do not understand anything in these lyrics ;-)

Melodies

There are many recent approaches to melody generation, most of them using machine learning (Shin 2017, Tardon 2019, Yang 2017, Zhu 2018…). But lyric-to-melody, harmonic progressions, rhythm… These are all challenging research subjects and we did not have the time to delve into them. So, for the chorus, we just gathered around the table, played the chords, and hummed along until we got to a simple and catchy melody. For the verses, we did not really come up with a melody; we just chose its rhythm to be pulse-like, as you can hear in the recording.

We took more liberty with the intro hook. We wanted to create a hook that would be recognizable as composed by the AI, even if the generation used a very simple model.

Model. We used a (no longer available) dataset of 10,000 melodies of the common practice period coming from A Dictionary of Musical Themes by Barlow. We discarded all themes in a minor key and transposed everything else to C major, the key of the hook. We computed two statistics: one on the note durations and the other on the interval between each note and the tonic. We used these distributions to sample sequences of notes with a total duration of 8 measures, the length of the hook. This model disregards all internal musical structure as well as the voicing, but we believed it would be a good baseline. We were wrong, but not by much.

We changed the octaves of all the notes to be between the F# below and the F above middle C. It worked like a charm. As the training dataset was classical music, often played at a slower tempo with somewhat complex melodies, the notes were too short. We multiplied all note durations by 2 and raised the minimal generated duration to a quaver.
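The sampling and post-processing steps described above can be sketched as follows in Python. This is an illustration under explicit assumptions, not the code used for the song: the two distributions are hypothetical (not the ones measured on the dataset), intervals are taken as semitones above the tonic C, the meter is assumed to be 4/4, and the last note is shortened so that the total is exactly 8 measures.

```python
import random

MIDDLE_C = 60          # MIDI number of middle C
LOW, HIGH = 54, 65     # F# below and F above middle C: exactly one octave

# Hypothetical distributions, NOT the ones measured on the dataset.
# Keys: semitones above the tonic / durations in quarter notes.
INTERVAL_WEIGHTS = {0: 5, 2: 3, 4: 4, 5: 2, 7: 5, 9: 2, 11: 1, 1: 0.2, 6: 0.2}
DURATION_WEIGHTS = {0.125: 1, 0.25: 3, 0.5: 4, 1.0: 2, 2.0: 1}


def draw(weights, rng):
    """Draw one key of a {value: weight} table."""
    return rng.choices(list(weights), weights=list(weights.values()))[0]


def fold_into_range(midi_pitch):
    """Shift a pitch by octaves so that LOW <= pitch <= HIGH."""
    return LOW + (midi_pitch - LOW) % 12


def sample_hook(rng, measures=8, beats_per_measure=4, min_duration=0.5):
    """Sample (midi_pitch, duration) pairs filling the requested measures."""
    total = measures * beats_per_measure
    notes, elapsed = [], 0.0
    while elapsed < total:
        pitch = fold_into_range(MIDDLE_C + draw(INTERVAL_WEIGHTS, rng))
        # Double the sampled duration and never go below a quaver.
        duration = max(2 * draw(DURATION_WEIGHTS, rng), min_duration)
        # Assumption: shorten the last note so the hook ends on the bar line.
        duration = min(duration, total - elapsed)
        notes.append((pitch, duration))
        elapsed += duration
    return notes


hook = sample_hook(random.Random(1))
```

Because the target range spans exactly twelve semitones, each pitch class has a single possible position after folding.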

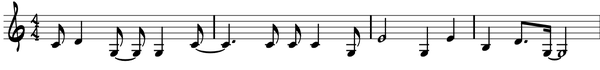

Generate, Compose. We generated 20 hooks, and… selected the hook that we liked the most! As the rest of the song was already established, there was not too much space for randomness or “excessive creativity”. By chance, it was the first one produced by the final model (here the first 4 measures, we decided to loop twice over them):

You can have a look at two more examples, one that also sounds quite good, and another one that we really didn’t like. With our sampling, it’s expected that diatonic tones, and especially the notes of the tonic and dominant triads (C/E/G/B/D), play a more significant role, as can be seen in the examples above. This follows the known patterns of pitch profiles. However, the generated melodies included other notes as well.

Music arrangement and production

Music arrangement, orchestration, and mixing are human here! We recognize that the biggest human intervention is the global arrangement plan: to underline the structure that was generated by the AI, we collectively decided how to introduce the additional tracks and how to alternate the singing voices across the sections in order to bring global contrasts and a progression of tension through the song. We chose a tempo of 128 bpm, a very common tempo… and a power of 2, which made our computer scientist souls happy.

Instrumental tracks. The piano is a hand-made voicing of the generated chord sequences with occasional additional non-chord notes. At the end of the song, it is doubled with an arpeggiator effect. A bass line and a string line were also composed by the human arrangers. The bass generally follows the root note of the chords and adds some straight fills between chords and between sections, which evolve along the sections of the song. The string line also underlines some sections and transitions. The rendering of these tracks was done in Logic Pro X (LPX) with some basic virtual instruments (VSTi): in order of appearance, African Kalimba, Subby Bass, Yamaha Grand Piano, Modern Strings, and Pad. A percussion track and a drum track were added using the Drummer component (loops Darcy - Retro Rock and Quincy - Studio).

Voices. Niam is the only human musician performing the song. A part of the verse melody (notably the end of Verse 2) was semi-improvised by her during the recording session. We chose to alternate the lead singing on the verses between Niam’s recording and a synthetic singing voice produced with the plugin Emvoice. As a third singing source, the LPX vocoder Evoc was used on the second bridge to morph the spoken lyrics “it’s ending” onto the chords. Finally, we chose to double and pitch-shift Niam’s voice on the last chorus to produce a lower harmony voice.

Mixing and mastering. Frédéric Pecqueur of Studio C&P did the mixing and mastering. We tried to keep all the parts involving human intervention as discreet as possible in the final mix.